Quantum limit

A quantum limit in physics is a limit on measurement accuracy at quantum scales.[1] Depending on the context, the limit may be absolute (such as the Heisenberg limit), or it may only apply when the experiment is conducted with naturally occurring quantum states (e.g. the standard quantum limit in interferometry) and can be circumvented with advanced state preparation and measurement schemes.

The use of the term standard quantum limit, or SQL, is, however, broader than just interferometry. In principle, any linear measurement of a quantum mechanical observable of a system under study that does not commute with itself at different times leads to such limits. In short, the Heisenberg uncertainty principle is the cause.

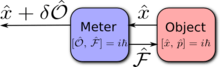

A more detailed explanation is that any measurement in quantum mechanics involves at least two parties, an Object and a Meter. The former is the system whose observable, say $\hat{x}$, we want to measure. The latter is the system we couple to the Object in order to infer the value of $\hat{x}$ of the Object by recording some chosen observable, $\hat{\mathcal{O}}$, of this system, e.g. the position of the pointer on a scale of the Meter. This, in a nutshell, is a model of most measurements in physics, known as indirect measurements (see pp. 38–42 of [1]). Any measurement is thus the result of an interaction, and that interaction acts both ways. Therefore, the Meter acts on the Object during each measurement, usually via the quantity, $\hat{\mathcal{F}}$, conjugate to the readout observable $\hat{\mathcal{O}}$, thus perturbing the value of the measured observable $\hat{x}$ and modifying the results of subsequent measurements. This is known as the (quantum) back action of the Meter on the system under measurement.

At the same time, quantum mechanics prescribes that the readout observable of the Meter should have an inherent uncertainty, $\delta\hat{\mathcal{O}}$, additive to and independent of the value of the measured quantity $\hat{x}$. This is known as measurement imprecision or measurement noise. Because of the Heisenberg uncertainty principle, this imprecision cannot be arbitrary and is linked to the back-action perturbation by the uncertainty relation:

$$\Delta\mathcal{O}\,\Delta\mathcal{F} \geq \frac{\hbar}{2},$$

where $\Delta a = \sqrt{\langle\hat{a}^2\rangle - \langle\hat{a}\rangle^2}$ is the standard deviation of an observable $a$ and $\langle\hat{a}\rangle$ stands for the expectation value of $a$ in whatever quantum state the system is in. The equality is reached if the system is in a minimum uncertainty state. The consequence for our case is that the more precise our measurement is, i.e. the smaller $\Delta\mathcal{O}$ is, the larger the perturbation the Meter exerts on the measured observable $\hat{x}$ will be. Therefore, the readout of the Meter will, in general, consist of three terms:

$$\hat{\mathcal{O}} = \hat{x}_{\mathrm{free}} + \delta\hat{\mathcal{O}} + \delta\hat{x}_{BA}[\hat{\mathcal{F}}],$$

where $\hat{x}_{\mathrm{free}}$ is the value of $\hat{x}$ that the Object would have were it not coupled to the Meter, and $\delta\hat{x}_{BA}[\hat{\mathcal{F}}]$ is the perturbation to the value of $\hat{x}$ caused by the back-action force, $\hat{\mathcal{F}}$. The uncertainty of the latter is proportional to $\Delta\mathcal{F} \propto \Delta\mathcal{O}^{-1}$. Thus, there is a minimal value, or limit, to the precision one can get in such a measurement, provided that $\delta\hat{\mathcal{O}}$ and $\hat{\mathcal{F}}$ are uncorrelated.[2][3]
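The trade-off can be made explicit in a short worked bound. This is only a sketch under assumptions that the text does not state: the readout $\hat{\mathcal{O}}$ is normalised to the units of $\hat{x}$, the back-action displacement is taken to scale linearly with the back-action force, $\Delta x_{BA} = \kappa\,\Delta\mathcal{F}$ with a hypothetical coupling constant $\kappa$, the Meter is in a minimum uncertainty state, and the two noise terms are uncorrelated so that their variances add.

$$(\Delta x)^2 = (\Delta\mathcal{O})^2 + \kappa^2(\Delta\mathcal{F})^2 = (\Delta\mathcal{O})^2 + \frac{\kappa^2\hbar^2}{4(\Delta\mathcal{O})^2} \geq 2\sqrt{\frac{\kappa^2\hbar^2}{4}} = \kappa\hbar .$$

The inequality is the arithmetic-geometric mean bound, and equality holds when the two terms are equal, $(\Delta\mathcal{O})^2 = \kappa\hbar/2$. Making the readout more precise than this only increases the total error, because the back-action term grows faster than the imprecision term shrinks.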

The terms "quantum limit" and "standard quantum limit" are sometimes used interchangeably. Usually, "quantum limit" is a general term which refers to any restriction on measurement due to quantum effects, while the "standard quantum limit" in any given context refers to a quantum limit which is ubiquitous in that context.

Examples

Displacement measurement

Let us consider a very simple measurement scheme which nevertheless embodies all key features of a general position measurement. In the scheme shown in the figure, a sequence of very short light pulses is used to monitor the displacement of a probe body $M$. The position $x$ of $M$ is probed periodically with time interval $\vartheta$. We assume the mass $M$ to be large enough to neglect the displacement inflicted by the pulses' regular (classical) radiation pressure in the course of the measurement process.

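Then each $j$-th pulse, when reflected, carries a phase shift proportional to the value of the test-mass position $x(t_j)$ at the moment of reflection:

$$\hat{\phi}_j^{\mathrm{refl}} = \hat{\phi}_j - 2k_p\hat{x}(t_j), \tag{1}$$

where $k_p = \omega_p/c$, $\omega_p$ is the light frequency, $j = \dots, -1, 0, 1, \dots$ is the pulse number and $\hat{\phi}_j$ is the initial (random) phase of the $j$-th pulse. We assume that the mean value of all these phases is equal to zero, $\langle\hat{\phi}_j\rangle = 0$, and that their root mean square (RMS) uncertainty $(\langle\hat{\phi}^2\rangle - \langle\hat{\phi}\rangle^2)^{1/2}$ is equal to $\Delta\phi$.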

The reflected pulses are detected by a phase-sensitive device (the phase detector). An optical phase detector can be implemented using, e.g., a homodyne or heterodyne detection scheme (see Section 2.3 in [2] and references therein), or other such read-out techniques.

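In this example, the light pulse phase $\hat{\phi}_j$ serves as the readout observable $\hat{\mathcal{O}}$ of the Meter. We then suppose that the measurement error of the phase $\hat{\phi}_j^{\mathrm{refl}}$ introduced by the detector is much smaller than the initial uncertainty of the phases, $\Delta\phi$. In this case, the initial uncertainty will be the only source of the position measurement error:

$$\Delta x_{\mathrm{meas}} = \frac{\Delta\phi}{2k_p}. \tag{2}$$

For convenience, we renormalise Eq. (1) as the equivalent test-mass displacement:

$$\tilde{x}_j \equiv -\frac{\hat{\phi}_j^{\mathrm{refl}}}{2k_p} = \hat{x}(t_j) + \hat{x}_{\mathrm{fl}}(t_j), \tag{3}$$

where

$$\hat{x}_{\mathrm{fl}}(t_j) = -\frac{\hat{\phi}_j}{2k_p}$$

are independent random values with the RMS uncertainties given by Eq. (2).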
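The conversion from phase to equivalent displacement can be sketched numerically. The snippet below is a minimal illustration, not part of the cited treatment: it takes the relations $\Delta x_{\mathrm{meas}} = \Delta\phi/(2k_p)$ and $\tilde{x}_j = -\hat{\phi}_j^{\mathrm{refl}}/(2k_p)$, and assumes the pulse is specified by its vacuum wavelength so that $k_p = \omega_p/c = 2\pi/\lambda$. The function names and the example values (1064 nm, 1 µrad) are hypothetical.

```python
import math


def wavenumber(wavelength: float) -> float:
    """k_p = omega_p / c = 2*pi / wavelength (wavelength in metres)."""
    return 2.0 * math.pi / wavelength


def position_error_from_phase(delta_phi: float, wavelength: float) -> float:
    """Position measurement error: Delta x_meas = Delta phi / (2 k_p)."""
    return delta_phi / (2.0 * wavenumber(wavelength))


def equivalent_displacement(phi_refl: float, wavelength: float) -> float:
    """Equivalent displacement: x_tilde = -phi_refl / (2 k_p)."""
    return -phi_refl / (2.0 * wavenumber(wavelength))


if __name__ == "__main__":
    # Illustrative values only (assumptions, not taken from the article).
    wavelength = 1064e-9   # metres
    delta_phi = 1e-6       # radians, RMS phase uncertainty of one pulse
    dx = position_error_from_phase(delta_phi, wavelength)
    print(f"position measurement error: {dx:.3e} m")
```

Because the error scales as $\lambda\,\Delta\phi/(4\pi)$, a shorter wavelength or a smaller phase uncertainty both tighten the position readout.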

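Upon reflection, each light pulse kicks the test mass, transferring to it a back-action momentum equal to

$$\hat{p}_j^{\mathrm{after}} - \hat{p}_j^{\mathrm{before}} = \hat{p}_j^{\mathrm{b.a.}} = \frac{2}{c}\hat{\mathcal{W}}_j, \tag{4}$$

where $\hat{p}_j^{\mathrm{before}}$ and $\hat{p}_j^{\mathrm{after}}$ are the test-mass momentum values just before and just after the light pulse reflection, and $\hat{\mathcal{W}}_j$ is the energy of the $j$-th pulse, which plays the role of the back-action observable $\hat{\mathcal{F}}$ of the Meter. The major part of this perturbation is contributed by classical radiation pressure:

$$\langle\hat{p}_j^{\mathrm{b.a.}}\rangle = \frac{2}{c}\mathcal{W},$$

with $\mathcal{W}$ the mean energy of the pulses. One can therefore neglect its effect, since it can be either subtracted from the measurement result or compensated by an actuator. The random part, which cannot be compensated, is proportional to the deviation of the pulse energy:

$$\hat{p}^{\mathrm{b.a.}}(t_j) = \hat{p}_j^{\mathrm{b.a.}} - \langle\hat{p}_j^{\mathrm{b.a.}}\rangle = \frac{2}{c}\left(\hat{\mathcal{W}}_j - \mathcal{W}\right),$$

and its RMS uncertainty is equal to

$$\Delta p_{\mathrm{b.a.}} = \frac{2\Delta\mathcal{W}}{c}, \tag{5}$$

with $\Delta\mathcal{W}$ the RMS uncertainty of the pulse energy.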
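As a rough numerical sketch of the back-action kick, the snippet below evaluates the mean kick $2\mathcal{W}/c$ and the RMS kick $2\Delta\mathcal{W}/c$. The helper for $\Delta\mathcal{W}$ rests on an assumption not made in the text: that each pulse is in a coherent state with Poissonian photon statistics, so that $\Delta\mathcal{W} = \hbar\omega_p\sqrt{N}$ for a mean photon number $N$. All function names are hypothetical.

```python
import math

C = 299_792_458.0          # speed of light in vacuum, m/s
HBAR = 1.054_571_817e-34   # reduced Planck constant, J*s


def mean_kick(mean_energy: float) -> float:
    """Classical radiation-pressure kick per pulse: 2 * W / c."""
    return 2.0 * mean_energy / C


def back_action_momentum_rms(delta_w: float) -> float:
    """Random (back-action) part of the kick: Delta p_b.a. = 2 * Delta W / c."""
    return 2.0 * delta_w / C


def coherent_pulse_energy_rms(n_photons: float, omega_p: float) -> float:
    """ASSUMPTION: coherent-state pulse, Delta W = hbar * omega_p * sqrt(N)."""
    return HBAR * omega_p * math.sqrt(n_photons)


if __name__ == "__main__":
    # Illustrative values only (assumptions, not taken from the article).
    omega_p = 2.0 * math.pi * C / 1064e-9   # angular frequency, rad/s
    n_photons = 1e12
    delta_w = coherent_pulse_energy_rms(n_photons, omega_p)
    print(f"mean kick: {mean_kick(HBAR * omega_p * n_photons):.3e} kg*m/s")
    print(f"RMS kick:  {back_action_momentum_rms(delta_w):.3e} kg*m/s")
```

Under the coherent-state assumption the mean kick grows as $N$ while the random kick grows only as $\sqrt{N}$, which is why the classical part dominates yet only the random part limits the measurement.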

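Assuming the mirror is free (which is a fair approximation if the time interval between pulses is much shorter than the period of the suspended mirror's oscillations, $\vartheta \ll T$), one can estimate the additional displacement caused by the back action of the $j$-th pulse, which will contribute to the uncertainty of the subsequent measurement by the $(j+1)$-th pulse a time $\vartheta$ later:

$$\hat{x}_{\mathrm{b.a.}}(t_j) = \frac{\hat{p}^{\mathrm{b.a.}}(t_j)\,\vartheta}{M}.$$

Its uncertainty will be simply

$$\Delta x_{\mathrm{b.a.}}(t_j) = \frac{\Delta p_{\mathrm{b.a.}}(t_j)\,\vartheta}{M}.$$

If we now want to estimate how much the mirror has moved between the $j$-th and $(j+1)$-th pulses, i.e. its displacement $\delta\tilde{x}_{j+1,j} = \tilde{x}(t_{j+1}) - \tilde{x}(t_j)$, we will have to deal with three additional uncertainties that limit the precision of our estimate:

$$\Delta\tilde{x}_{j+1,j} = \left[\left(\Delta x_{\mathrm{meas}}(t_{j+1})\right)^2 + \left(\Delta x_{\mathrm{meas}}(t_j)\right)^2 + \left(\Delta x_{\mathrm{b.a.}}(t_j)\right)^2\right]^{1/2},$$

where we assumed all contributions to our measurement uncertainty to be statistically independent and thus obtained the total uncertainty by adding the variances, i.e. by summing the standard deviations in quadrature. If we further assume that all light pulses are similar and have the same phase uncertainty, then $\Delta x_{\mathrm{meas}}(t_{j+1}) = \Delta x_{\mathrm{meas}}(t_j) \equiv \Delta x_{\mathrm{meas}} = \Delta\phi/(2k_p)$.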
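The error budget of the two-pulse estimate can be sketched as a quadrature sum. The snippet is a minimal illustration that assumes, as the text does, a free mirror and statistically independent contributions. The function names are hypothetical.

```python
import math


def back_action_displacement_rms(delta_p_ba: float, theta: float, mass: float) -> float:
    """Free-mass drift caused by the random kick: Delta x_b.a. = Delta p_b.a. * theta / M."""
    return delta_p_ba * theta / mass


def displacement_estimate_rms(dx_meas_next: float, dx_meas_now: float, dx_ba_now: float) -> float:
    """Total RMS error of x(t_{j+1}) - x(t_j) for independent contributions.

    Variances add, so the standard deviations are combined in quadrature.
    """
    return math.sqrt(dx_meas_next ** 2 + dx_meas_now ** 2 + dx_ba_now ** 2)


if __name__ == "__main__":
    # Illustrative values only (assumptions, not taken from the article).
    dx_meas = 1e-19                                          # metres
    dx_ba = back_action_displacement_rms(1e-16, 1e-2, 10.0)  # metres
    print(displacement_estimate_rms(dx_meas, dx_meas, dx_ba))
```

Note that a plain sum of the three standard deviations would overestimate the error. The quadrature sum is the appropriate rule only because the contributions are taken to be uncorrelated.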

Now, what is the minimum of this sum, and what is the minimum error one can get in this simple estimate? The answer follows from quantum mechanics, if we recall that the energy and the phase of each pulse are canonically conjugate observables and thus obey the following uncertainty relation:

$$\Delta\mathcal{W}\,\Delta\phi \geq \frac{\hbar\omega_p}{2}.$$

Therefore, it follows from Eqs. (2) and (5) that the position measurement error $\Delta x_{\mathrm{meas}}$ and the momentum perturbation $\Delta p_{\mathrm{b.a.}}$ due to back action also satisfy the uncertainty relation:

$$\Delta x_{\mathrm{meas}}\,\Delta p_{\mathrm{b.a.}} \geq \frac{\hbar}{2}.$$
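The intermediate step can be written out in one line. This is a sketch that takes Eq. (2) as $\Delta x_{\mathrm{meas}} = \Delta\phi/(2k_p)$ and Eq. (5) as $\Delta p_{\mathrm{b.a.}} = 2\Delta\mathcal{W}/c$, with $k_p = \omega_p/c$:

$$\Delta x_{\mathrm{meas}}\,\Delta p_{\mathrm{b.a.}} = \frac{\Delta\phi}{2k_p}\cdot\frac{2\Delta\mathcal{W}}{c} = \frac{\Delta\phi\,\Delta\mathcal{W}}{k_p c} = \frac{\Delta\phi\,\Delta\mathcal{W}}{\omega_p} \geq \frac{1}{\omega_p}\cdot\frac{\hbar\omega_p}{2} = \frac{\hbar}{2}.$$

The optical frequency cancels, so the bound on the mirror's position and momentum noise does not depend on the colour of the probing light.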

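Taking this relation into account, the minimal uncertainty, $\Delta x_{\mathrm{meas}}$, that the light pulse should have in order not to perturb the mirror too much should be equal to $\Delta x_{\mathrm{b.a.}}$, yielding for both $\Delta x_{\mathrm{min}} = \sqrt{\hbar\vartheta/(2M)}$. Thus the displacement measurement error prescribed by quantum mechanics reads:

$$\Delta\tilde{x}_{j+1,j} \geq \left[2\left(\Delta x_{\mathrm{meas}}\right)^2 + \left(\frac{\hbar\vartheta}{2M\Delta x_{\mathrm{meas}}}\right)^2\right]^{1/2},$$

which for $\Delta x_{\mathrm{meas}} = \Delta x_{\mathrm{min}}$ equals $\sqrt{3\hbar\vartheta/(2M)}$.

This is the Standard Quantum Limit for such a 2-pulse procedure. If we limit our measurement to two pulses only and do not care about perturbing the mirror position afterwards, the second pulse's measurement uncertainty, $\Delta x_{\mathrm{meas}}(t_{j+1})$, can, in theory, be reduced to 0 (which will, of course, yield $\Delta p_{\mathrm{b.a.}}(t_{j+1}) \to \infty$), and the limit of the displacement measurement error will reduce to:

$$\Delta\tilde{x}_{\mathrm{SQL}} = \sqrt{\frac{\hbar\vartheta}{M}},$$

which is known as the Standard Quantum Limit for the measurement of free mass displacement.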
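These closed-form values can be checked numerically. The sketch below assumes that the pulses saturate $\Delta x_{\mathrm{meas}}\,\Delta p_{\mathrm{b.a.}} = \hbar/2$, so that $\Delta x_{\mathrm{b.a.}} = \hbar\vartheta/(2M\Delta x_{\mathrm{meas}})$. It evaluates the two-pulse error at the balanced point and scans the single-sided error (second pulse taken as error-free) around it. The function names, the 10 kg mass and the 10 ms interval are hypothetical.

```python
import math

HBAR = 1.054_571_817e-34   # reduced Planck constant, J*s


def back_action_drift(dx_meas: float, theta: float, mass: float) -> float:
    """Delta x_b.a. for a pulse that saturates dx_meas * dp_b.a. = hbar / 2."""
    return HBAR * theta / (2.0 * mass * dx_meas)


def two_pulse_error(dx_meas: float, theta: float, mass: float) -> float:
    """Both pulses carry the same readout error dx_meas."""
    return math.sqrt(2.0 * dx_meas ** 2 + back_action_drift(dx_meas, theta, mass) ** 2)


def one_sided_error(dx_meas: float, theta: float, mass: float) -> float:
    """Second pulse assumed error-free; only the first pulse's noise remains."""
    return math.sqrt(dx_meas ** 2 + back_action_drift(dx_meas, theta, mass) ** 2)


def balanced_dx(theta: float, mass: float) -> float:
    """Readout error at which imprecision equals back-action drift."""
    return math.sqrt(HBAR * theta / (2.0 * mass))


def sql_free_mass(theta: float, mass: float) -> float:
    """Standard Quantum Limit for free-mass displacement: sqrt(hbar * theta / M)."""
    return math.sqrt(HBAR * theta / mass)


if __name__ == "__main__":
    theta, mass = 1e-2, 10.0   # illustrative values (assumptions)
    dx0 = balanced_dx(theta, mass)
    print("two-pulse error at balance:", two_pulse_error(dx0, theta, mass))
    print("free-mass SQL:             ", sql_free_mass(theta, mass))
    for scale in (0.5, 1.0, 2.0):
        print(scale, one_sided_error(scale * dx0, theta, mass))
```

In the scan, the single-sided error is smallest at `scale = 1.0`, where it coincides with `sql_free_mass`, and it grows when the readout is made either more or less precise.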

This example represents a simple particular case of a linear measurement. This class of measurement schemes can be fully described by two linear equations of the form (3) and (4), provided that both the measurement uncertainty and the object back-action perturbation ($\hat{x}_{\mathrm{fl}}$ and $\hat{p}^{\mathrm{b.a.}}$ in this case) are statistically independent of the test object's initial quantum state and satisfy the same uncertainty relation as the measured observable and its canonically conjugate counterpart (the object position and momentum in this case).

Usage in quantum optics

In the context of interferometry or other optical measurements, the standard quantum limit usually refers to the minimum level of quantum noise which is obtainable without squeezed states.[4]

There is additionally a quantum limit for phase noise, reachable only by a laser at high noise frequencies.

In spectroscopy, the shortest wavelength in an X-ray spectrum is called the quantum limit.[5]

Misleading relation to the classical limit

Note that due to an overloading of the word "limit", the classical limit is not the opposite of the quantum limit. In "quantum limit", "limit" is being used in the sense of a physical limitation (e.g. the Armstrong limit). In "classical limit", "limit" is used in the sense of a limiting process. (Note that there is no simple rigorous mathematical limit which fully recovers classical mechanics from quantum mechanics, the Ehrenfest theorem notwithstanding. Nevertheless, in the phase space formulation of quantum mechanics, such limits are more systematic and practical.)

References and Notes

1. Braginsky, V. B.; Khalili, F. Ya. (1992). Quantum Measurement. Cambridge University Press. ISBN 978-0521484138.

2. Danilishin, S. L.; Khalili, F. Ya. (2012). "Quantum Measurement Theory in Gravitational-Wave Detectors". Living Reviews in Relativity (5): 60. arXiv:1203.1706. Bibcode:2012LRR....15....5D. doi:10.12942/lrr-2012-5.

3. Chen, Yanbei (2013). "Macroscopic quantum mechanics: theory and experimental concepts of optomechanics". J. Phys. B: At. Mol. Opt. Phys. 46: 104001. arXiv:1302.1924. Bibcode:2013JPhB...46j4001C. doi:10.1088/0953-4075/46/10/104001.

4. Jaekel, M. T.; Reynaud, S. (1990). "Quantum Limits in Interferometric Measurements". Europhysics Letters. 13 (4): 301. arXiv:quant-ph/0101104. Bibcode:1990EL.....13..301J. doi:10.1209/0295-5075/13/4/003.

5. Piston, D. S. (1936). "The Polarization of X-Rays from Thin Targets". Physical Review. 49 (4): 275. Bibcode:1936PhRv...49..275P. doi:10.1103/PhysRev.49.275.